Abstracts presentations Chemometrics Symposium 2016

Rudolf Kessler

“Perspectives in Process Analysis: Remarks on Robustness of Spectroscopic Methods for Industrial Applications".

Prof. Dr. Rudolf W. Kessler

Steinbeis Technology Transfer Centre Process Control and Data Analysis,

Kaiserstr. 66, 72764 Reutlingen, Germany

Reutlingen University, Process Analysis & Technology, PA&T,

contact:

Intelligent manufacturing has attracted enormous interest in recent years, not least because of the Process Analytical Technology/Quality by Design platform of the FDA and now also by the initiative Industrie 4.0. The future of industrial automation will be “arbitrarily modifiable and expandable (flexible), connect arbitrary components of multiple producers (networked), enabling its components to perform tasks related to its context independently (self-organizational) and emphasizes ease of use (user-oriented)”. Optical spectroscopy will play a major role in the sensor technology as it provides simultaneously chemical and morphological information [1].

The sensitivity of an analyte can be described by the quantum mechanical cross sections of the molecule which are the effective area that governs the probability of an event of e.g. elastic scattering, or absorption, or emission (e.g. fluorescence or Raman) of a photon at a specified wavelength. Another very important criterion for an analytical method is its capability to deliver signals that are free from interferences and give “true results” and is called selectivity resp. specificity. The responses are based on interactions usually evaluated in a mathematical domain by e.g. chemometrics, giving what has been called “computational selectivity”. Besides these key elements, many other aspects must also be taken into account to ensure robustness of the inline measurement [2].

The paper will discuss the advantages and disadvantages of the spectroscopic inline techniques. Examples demonstrate robust optical set ups in different industrial applications, e.g. biotechnology, pharmacy and manufacturing industry. It illustrates the opportunity to receive robust spectroscopic information with causal relations to the target value. It is important to emphasize, that inline quality control by spectroscopic techniques is a holistic approach. Process chemists, process engineers, chemometricians, and many other technologists must work together where multimodality will be a bedrock supporting the production of smart materials in smart factories.

[1] R. W. Kessler, Perspectives in Process Analysis. J. Chemometrics, 2013, 27: 369–378. doi: 10.1002/cem.2549

[2] R. W. Kessler, W. Kessler and E. Zikulnig-Rusch, A Critical Summary of Spectroscopic Techniques and their Robustness in Industrial PAT Applications, Chem. Ing. Tech. 2016, 88, No. 6, 710–721

Jan Gerretzen

“A novel and effective way for preprocessing selection based on Design of Experiments”.

Jan Gerretzen1,2,3, Ewa Szymańska1,2, Jacob Bart3, Tony Davies3, Henk-Jan van Manen3, Edwin van den Heuvel4, Jeroen Jansen1 and Lutgarde Buydens1

1Radboud University, Institute for Molecules and Materials, Heyendaalseweg 135, 6525 AJ Nijmegen, The Netherlands

2TI-COAST, Science Park 904, 1098 XH Amsterdam, The Netherlands

3AkzoNobel, Supply Chain, Research & Development, Zutphenseweg 10, 7418 AJ Deventer, The Netherlands

4Eindhoven University of Technology, Groene Loper 5, 5600 RM Eindhoven, The Netherlands

The aim of data preprocessing is to remove data artifacts—such as a baseline, scatter effects or noise—from the data and to enhance the analytically relevant information. Many preprocessing methods exist to do either or both, but it is not at all clear on beforehand which preprocessing methods should optimally be used. Appropriate preprocessing selection is a very important aspect in data analysis, since we have shown that multivariate model performance is often heavily influenced by preprocessing [1].

Current preprocessing selection approaches, however, are time consuming and/or based on arbitrary choices that do not necessarily improve the model [1]. It is thus highly desired to come up with a novel, generic preprocessing selection procedure that leads to a proper preprocessing within reasonable time. In this presentation, a novel and simple preprocessing selection approach will be presented, based on Design of Experiments (DoE) and Partial Least Squares (PLS) [2]. It will be shown that preprocessing selected using this approach improves both improves model performance and model interpretability. The latter will be illustrated using variable selection. The focus of the presentation will be on spectroscopic data.

This work is part of the ‘Analysis of Large data sets By Enhanced Robust Techniques’ project (ALBERT), funded by TI-COAST, which aims to develop generic strategies and methods to facilitate better and more robust chemometric and statistical analyses of complex analytical data.

Acknowledgement: This research received funding from the Netherlands Organization for Scientific Research (NWO) in the framework of Technology Area COAST.

References:

[1] J. Engel, et al. Trends in Anal. Chem., 50, 96 (2013)

[2] J. Gerretzen, et al., Anal. Chem., 87, 12096 (2015)

Andrei Barcaru

“Thinking Bayes: creating new ways of interpreting GCxGC(-MS) data”.

Nowadays, Bayesian statistics becomes increasingly popular in application fields as diverse as psychometrics, geophysics, sociology, medicine and (last but not least) analytical chemistry. The objectivity and robustness of Bayesian analysis made it an important tool in the WW II. Nowadays it has become the mathematical representation of how our brain works [1].

In the CHROMAMETRICS project, we used Bayesian statistics to create powerful and robust algorithms for data processing originated by chromatographic and mass spectrometry instrumentation. Some useful applications developed within the CHROMAMETRICS project based on Bayesian paradigm are going to be discussed in this communication. For example, Bayesian statistics has been applied to peak tracking in two-dimensional gas chromatography. Peak tracking refers to the matching of two peak tables. When a sample is analyzed in two different conditions (different inlet pressure and/or temperature program), the goal is to match the compounds from one chromatogram with those in the other. [2] The application of Bayesian analysis to this problem results in a paradigm shift compared to the way traditional peak matching algorithms work. Instead of a single (deterministic) answer, a collection of answers about possible peak matching results is achieved, with a probability assigned for each peak assignation. Another example is the comparison of two samples analyzed in GCxGC-MS, to find meaningful differences among them. In this case, the objective is to find compounds that are present in one sample but absent in the other. The application of Jensen-Shannon divergence within a Bayesian framework proved to give meaningful results, as well as robust against peak misalignments. [3]

In this communication, emphasis is given in the paradigm shift that using Bayesian analysis supposes, against traditional data analysis methods. Moreover, the methods developed in this project integrate different tools from different fields such as information technology and image analysis within the Bayesian framework.

[1] S. B. McGrayne, The Theory That Would Not Die: How Bayes’ Rule Cracked the Enigma Code, Hunted Down Russian Submarines, and Emerged Triumphant from Two Centuries of Controversy., Yale University Press, 2011.

[2] A. Barcaru, E. Derks and G. Vivó-Truyols, "Bayesian peak tracking: a novel probabilistic approach to match GCxGC chromatograms," Anal. Chim. Acta, 2016.

[3] A. Barcaru and G. Vivó-Truyols, "Use of Bayesian Statistics for Pairwise Comparison of Megavariate Data Sets: Extracting Meaningful Differences between GCxGC-MS Chromatograms Using Jensen–Shannon Divergence," Anal. Chem., no. 88 (4), p. 2096–2104, 2016.

Cyril Ruckebusch

“Chemometric analysis of chemical images ”.

Cyril Ruckebusch, Université de Lille, LASIR CNRS UMR 8516, F-59000 Lille, France

Chemical imaging aims to create a visual image of the different chemical compounds in a sample. This can be achieved by simultaneous measurement of spatial, spectral and/or temporal information. Image processing is required to obtain information regarding the mapping or distribution of these compounds in a biological or chemical image. In this talk, we will present our recent efforts to process hyperspectral images and super-resolution wide-field fluorescence nanoscopy data. We will show how the results obtained can be relevant to analyze other types of imaging modalities.

Olga Lushchikova

“Development of alarm system for water quality control based on flow cytometry data of algal populations”.

Olga Lushchikovaab, Gerjen Tinneveltab, Arnold Veenc, Jeroen Jansenb

aTI-COAST, Science Park 904, 1098 XH Amsterdam, The Netherlands

bRadboud University, Institute for Molecules and Materials, (Analytical Chemistry), P.O. Box 9010, 6500 GL Nijmegen, The Netherlands

cRijkswaterstaat, Zuiderwagenplein 2, 8224 AD Lelystad, The Nederlands

Rijkswaterstaat (RWS) applied flow cytometry for the on-line biomonitoring of algal cells in the river water to perform an accurate and fast water quality control. This biomonitoring is based on the measurement of optical properties of every single cell, that lead to a large amount of data. This is why, we developed a dedicated chemometric method, which is called DAMACY, to analyse the spectroscopic data. DAMACY is a multivariate method, which first describes the measured fingerprints of the algal communities. Secondly, this method predicts the normal environmental conditions such as the water temperature or day length. If the predicted natural factor shows large deviation, the sample can be examined more carefully to find the non-natural cause. Previously the results were obtained using offline methods, such as microscopy. Because of this procedure the monitoring could not be frequent whereupon the small water pollutions were hidden. This warning system will detect specific water pollution events early and robustly.

Ewoud van Velzen

"Robustness: A key parameter in the validation of multivariate sensory regression models”.

E.J.J. van Velzen1, S. Lam2, E. Saccenti2, A.K. Smilde2, E. Tareilus1, J.P.M. van Duynhoven1, D.M. Jacobs1

1Unilever Research, Microbiology & Analytical, Olivier van Noortlaan 120, Vlaardingen, The Netherlands

2Universiteit van Amsterdam, Biosystems Data Analysis, Science Park 904, Amsterdam, The Netherlands

International guidelines define robustness of an analytical procedure as a measure of its capacity to remain unaffected by small variations in experimental conditions [1]. Robustness is generally not considered in validation protocols even though it provides an important indicator of the fitness of an analytical procedure during normal use. Also in the validation of multivariate sensory regression models robustness is seldom included. Particularly in “Sensoromics” analysis, where multivariate relationships are assessed between descriptive (consumer) data and compositional (chemical) data, we state that a measure of robustness is a critical parameter for establishing model reliability and model suitability.

|

|

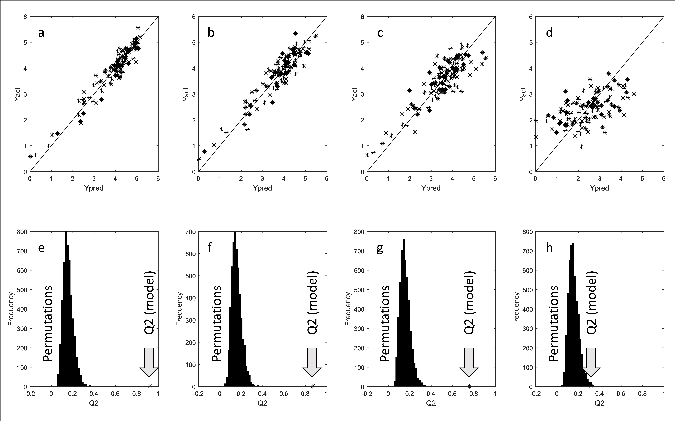

Figure 1. Actual versus predicted sensory scores obtained from a statistically significant PLS model (a-d). Increasing levels of artificial noise were added. As a result the statistical significance of the model dropped. This is demonstrated by the Q2-values in a permutation test (e-h). |

We demonstrated the importance of robustness testing in a sensory study in which the chemical compositions of natural food products was associated with the descriptive scores of a trained consumer panel. This multivariate association was modelled by means of PLS. The data was rather complex as (i) the predictor set was compiled of various analytical datasets with different error structures, and (ii) the predictor set contained missing data. Furthermore, the data was sparse given the enormous sample diversity in the training set. By integration of predefined noise simulations in the double cross-validation and the permutation testing [2], estimates of the relevant model statistics (e.g. Q2-values) could be obtained (Figure 1). The aim of this approach is that the susceptibility of the multivariate models against experimental errors can be tested. We found that robustness testing is of great additional value in the validation of multivariate prediction models. An important prerequisite, however, is that the error structures of the dependent and independent variables should be well known in advance.

References:

[1] Y. vander Heyden et al., J Pharm Biomed Anal., 5-6, 723-753 (2001).

[2] J.A. Westerhuis et al., Metabolomics, 4, 1, 81-89 (2008).